Replication to NVMe over Fabrics Target

Data on a disk can be lost for various reasons (e.g. hardware failure). To protect against this, you can make a real-time backup of a specified volume by creating a synchronous or asynchronous replication service. This feature lets you create a mirror backup from a volume to a SAN target such as iSCSI, iSER, or NVMe-oF (NVMe over Fabrics). The benefit of asynchronous replication is that it does not slow down I/O on the source volume, because writes are acknowledged locally without waiting for the remote target.
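The difference between the two modes can be illustrated with a small toy model. The Python sketch below is a hypothetical in-memory simulation, not the product's replication engine: the class name, the `flush()` method and the dictionaries standing in for the source volume and the NVMe-oF target are all assumptions made for illustration. It shows when each mode updates the remote copy. A synchronous write updates the remote copy before returning. An asynchronous write returns as soon as the local copy is updated and is shipped later, so the remote copy can lag behind.

```python
from collections import deque


class ToyReplicatedVolume:
    """Hypothetical toy model of a replicated volume (not the product's code).

    A 'volume' is a dict mapping block number -> bytes.
    """

    def __init__(self, synchronous: bool):
        self.synchronous = synchronous
        self.local = {}          # the source volume
        self.remote = {}         # stands in for the remote NVMe-oF target
        self.pending = deque()   # writes not yet shipped (asynchronous mode only)

    def write(self, block: int, data: bytes) -> None:
        self.local[block] = data
        if self.synchronous:
            # Synchronous: the remote copy is updated before the write returns.
            self.remote[block] = data
        else:
            # Asynchronous: return right away and ship the write later.
            self.pending.append((block, data))

    def flush(self) -> None:
        """Ship queued writes to the remote target, oldest first."""
        while self.pending:
            block, data = self.pending.popleft()
            self.remote[block] = data


vol = ToyReplicatedVolume(synchronous=False)
vol.write(0, b"A")
print(vol.remote)  # prints {} because the remote copy lags until flush()
vol.flush()
print(vol.remote)  # prints {0: b'A'}
```

In the synchronous case every write pays for the round trip to the remote target; in the asynchronous case that cost moves out of the write path, at the price of a window in which the remote copy is behind the source.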

1. Create a Synchronous/Asynchronous Replication to an NVMe-oF (NVMe over Fabrics) Target:

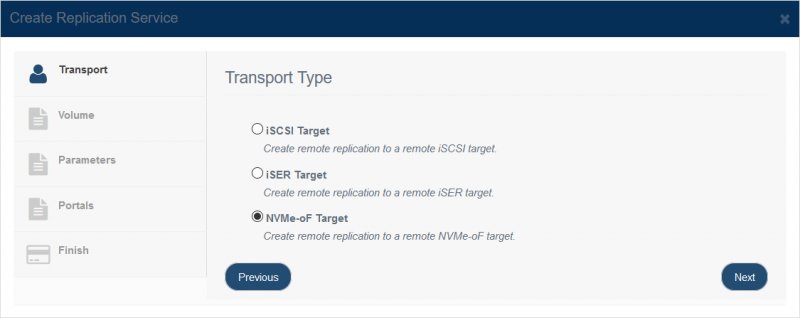

Step 1. Select Replications in the left panel and click the Add button on the top toolbar of the management system. The Create Replication Service wizard pops up.

Choose NVMe-oF Target as the Transport Type.

Press the Next button to continue.

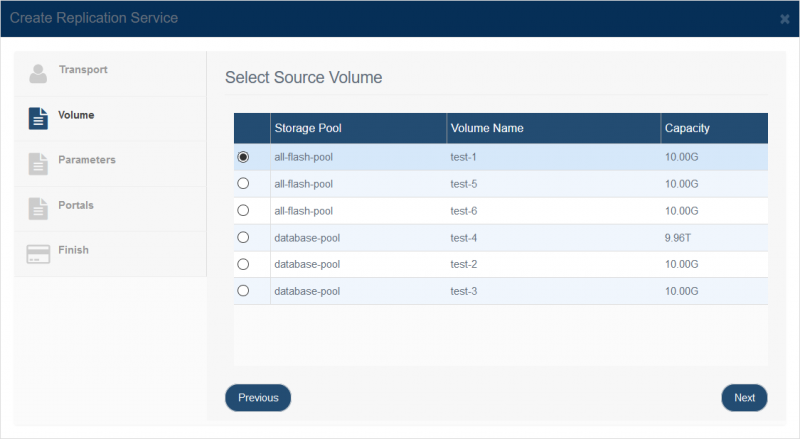

Step 2. Select the source volume for which you want to create the remote replication.

Choose one volume.

Press the Next button to continue.

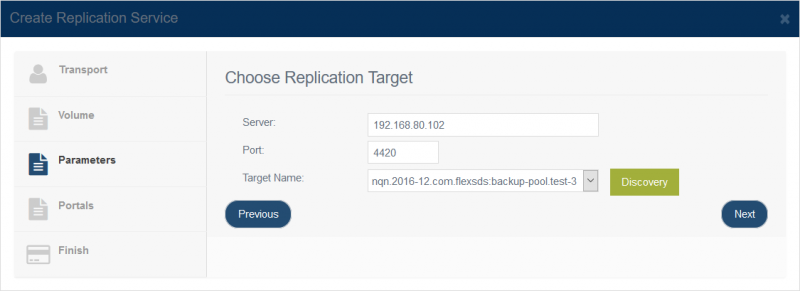

Step 3. Discover the remote NVMe-oF target.

Enter the host name and port of the remote NVMe over Fabrics server.

Press the Discovery button to list all the NVMe over Fabrics targets on the remote server, then select one as the remote mirror device.

Then press the Next button to continue.
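The wizard performs discovery for you. If you want to check from a Linux host that the remote server answers discovery requests before running the wizard, the open-source nvme-cli tool provides a `discover` command with `-t` (transport), `-a` (target address) and `-s` (transport service ID, i.e. the port) options. The Python helper below only builds that command line and does not run it. The function name, the restriction to `tcp`/`rdma`, and the example address `192.0.2.10` and port `4420` are assumptions for illustration; which transport the remote server uses depends on your setup.

```python
import shlex


def build_discover_cmd(traddr: str, trsvcid: int, transport: str = "tcp") -> list[str]:
    """Build (but do not run) an nvme-cli 'discover' command line.

    Hypothetical helper: this sketch only accepts the tcp and rdma transports.
    """
    if transport not in ("tcp", "rdma"):
        raise ValueError(f"unsupported transport: {transport}")
    if not traddr:
        raise ValueError("target address must not be empty")
    if not 1 <= trsvcid <= 65535:
        raise ValueError(f"port out of range: {trsvcid}")
    return ["nvme", "discover", "-t", transport, "-a", traddr, "-s", str(trsvcid)]


cmd = build_discover_cmd("192.0.2.10", 4420)
print(shlex.join(cmd))  # prints nvme discover -t tcp -a 192.0.2.10 -s 4420
```

Returning an argument list rather than a single string lets you pass it directly to `subprocess.run` without shell quoting issues; `shlex.join` is used only to display it.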

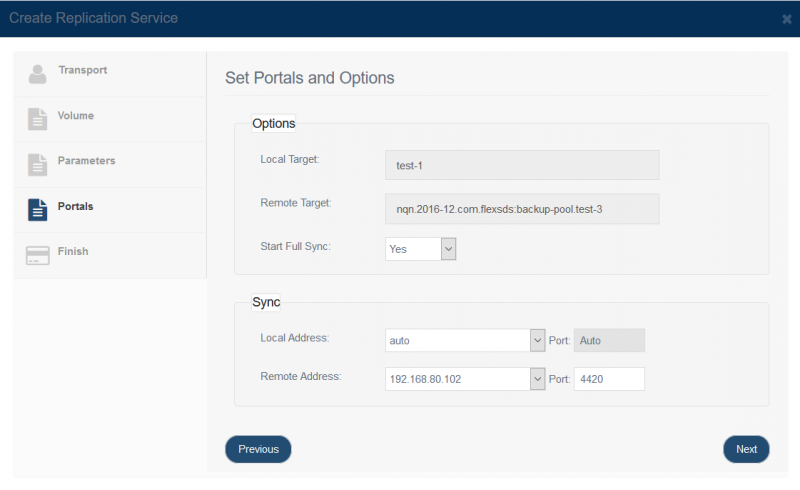

Step 4. Set the portals and options of the replication.

To fully synchronize the replication device from the base volume to the remote NVMe-oF target, select ‘Full Sync’. Otherwise, select ‘No Sync’ if the volume is blank (newly created) or if you want to synchronize later.

Specify the local and remote portal pairs (IP address and port).
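Each portal pair couples a local IP address and port with a remote IP address and port. The wizard collects these through its form; as a sketch of the data being entered, the Python code below models a portal as an address plus a port and validates a list of local/remote pairs. The `IP:port` text format, the class and function names, and the IPv4-only restriction are assumptions for illustration, not the product's input format.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Portal:
    """Hypothetical portal model: an IPv4 address plus a TCP/UDP-style port."""
    ip: str
    port: int

    def __post_init__(self):
        ipaddress.IPv4Address(self.ip)  # raises ValueError on a malformed address
        if not 1 <= self.port <= 65535:
            raise ValueError(f"port out of range: {self.port}")


def parse_portal(text: str) -> Portal:
    """Parse an 'IP:port' string (IPv4 only in this sketch)."""
    ip, sep, port = text.rpartition(":")
    if not sep:
        raise ValueError(f"missing port in {text!r}")
    return Portal(ip, int(port))  # int() raises ValueError on a non-numeric port


def parse_portal_pairs(pairs):
    """Turn [(local, remote), ...] strings into (Portal, Portal) tuples."""
    return [(parse_portal(local), parse_portal(remote)) for local, remote in pairs]
```

For example, `parse_portal_pairs([("10.0.0.1:4420", "10.0.0.2:4420")])` returns one `(Portal, Portal)` tuple, while an entry such as `"10.0.0.300:4420"` is rejected with a `ValueError`.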

Note: All data on the mirror device will be destroyed after synchronization.
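Full Sync copies the whole source volume onto the remote device, which is why the mirror's previous contents are destroyed; No Sync copies nothing and relies on the two devices already matching (for example, both are blank). The toy Python model below shows that difference on lists of blocks. The function name, the `"full"`/`"none"` mode strings and the block-list representation are hypothetical and do not describe how the product actually transfers data.

```python
def initial_sync(mode: str, source: list, mirror: list) -> int:
    """Hypothetical toy model of the initial synchronization.

    Returns the number of blocks copied from source to mirror.
    """
    if mode == "none":
        # 'No Sync': nothing is copied. The mirror matches the source only if
        # it already did (e.g. both blank) or if a sync is run later.
        return 0
    if mode != "full":
        raise ValueError(f"unknown sync mode: {mode}")
    if len(mirror) < len(source):
        raise ValueError("mirror device is smaller than the source volume")
    for i, block in enumerate(source):
        mirror[i] = block  # whatever the mirror held here is overwritten
    return len(source)
```

For example, running `initial_sync("full", src, mir)` with three source blocks copies 3 blocks and leaves `mir` identical to `src`, whereas `"none"` leaves `mir` untouched.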

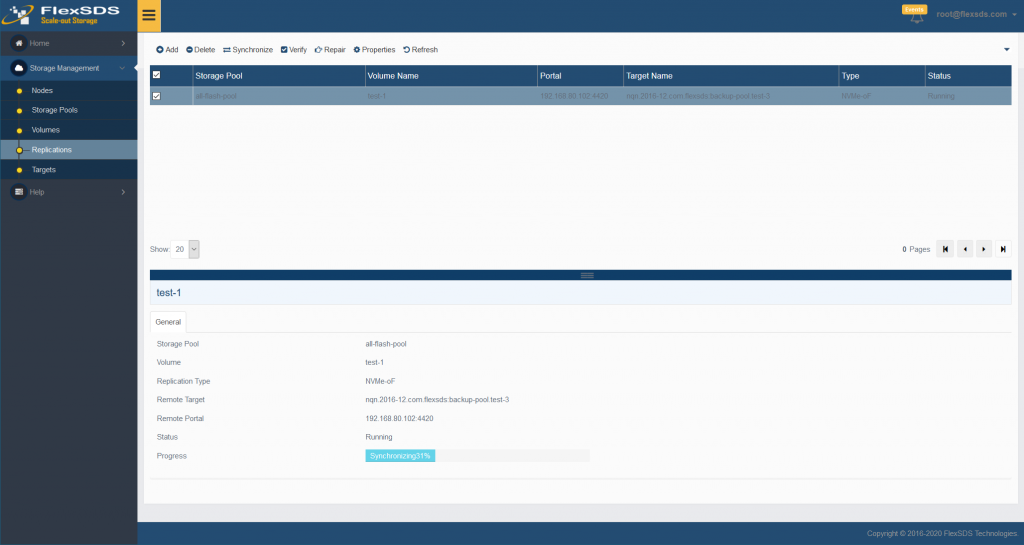

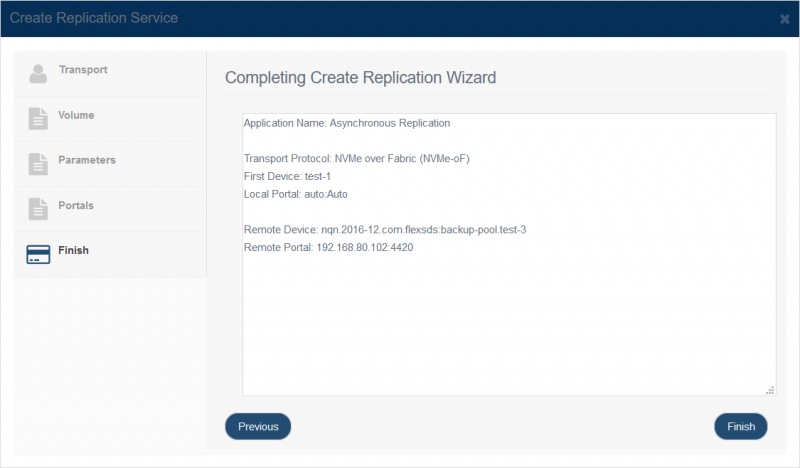

Step 5. Complete Replication Creation

If any change is required, press the Back button; otherwise, press the Finish button to complete the replication creation. Once it has been created successfully, the replication service is shown in the main interface.