Installing the FlexSDS scale-out software is easy; please refer to the FlexSDS user’s manual for setup and deployment instructions.

FlexSDS releases high-performance, optimized SDS software stack for hybrid and all-flash servers

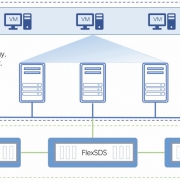

Oct 01, 2020: FlexSDS, a globally recognized name in high-performance software-defined storage, has announced a new release of its distributed scale-out storage software stack, 2020 V4, for hybrid and all-flash servers. FlexSDS software-defined storage is now available to end users and OEM partners worldwide.

FlexSDS includes its own core service: a high-performance, lock-free scheduler that, much like an operating system, manages all of the server’s resources, including CPU, memory, storage, PCI devices, and NUMA nodes. The scheduler runs in polling mode and makes full use of the hardware to deliver maximum storage performance.

FlexSDS provides a user-mode (kernel-bypass) NVMe driver that supports directly attached devices, RAID pools, and SDS pools. An SDS pool can host multiple volumes, each exposed as a highly available, snapshot-enabled iSCSI, iSER, or NVMe-oF target. FlexSDS lets you run any application at any scale, with fast time-to-value and non-disruptive scale-up or scale-out.

FlexSDS is a software-only solution that runs on industry-standard hardware (any x86-based machine). It supports unlimited capacity per node and up to 2 PB per storage pool. It can serve as external storage or hyperconverged (HCI) storage for private and hybrid clouds, providing thin-provisioned, log-structured, or raw volumes and exporting them over SAN protocols.

Performance

- Polling-mode server pool that listens on multiple NICs and ports.

- Delivers the full performance of NVMe devices over the network.

- Scales performance and capacity linearly.

- Provides high IOPS and high bandwidth.

- Designed for next-generation hardware: kernel bypass, a lock-free model, zero memory copy, and CPU-cache optimization deliver extremely low latency.

- Parallel, highly concurrent I/O with high utilization of storage and network resources.

Efficiency

- Supports current and future media workflows

- Uses standard server, storage, and network devices.

- Single-pane management: an easy-to-use, all-in-one, centralized web management platform.

- Low total cost of ownership (TCO)

Flexibility

- 100% SDS pool that manages all disks as a single pool, with the ability to create arbitrary, dynamic block volumes with unlimited zero-copy snapshots.

- The block interface integrates easily with legacy file systems.

- Mix different storage devices (NVMe / SSD / HDD) to optimize for cost, scale or performance

- Scale out storage and compute as needed.

- Fast deployment and scaling of storage resources, with dynamic workload scaling and balancing.

- Supports up to 1024 server nodes and can provide block storage to Windows Hyper-V, VMware vSphere, Citrix XenServer, or KVM/QEMU hypervisors.

- Multiple nodes and data copies, high availability, and automatic recovery.

- Efficient asynchronous replication

- Protocol support: iSCSI (TCP), iSER (iSCSI Extensions for RDMA), and NVMe-oF (NVMe over Fabrics).

- Unlimited space-optimized snapshots and clones, periodic or continuous asynchronous long-distance replication.

- High availability and remote mirroring: all interfaces (iSCSI, iSER, and NVMe-oF) support HA.

- Legacy device support: SATA/SAS HDDs and SSDs.

FlexSDS Release Notes

Release Version: FlexSDS 2020 V4, Release Date: 06/03/2020

Release Features:

- Storage Network: iSCSI, iSER, and NVMe over Fabrics

- Server Pool: Polling Mode

- Storage Pool: True Scale-out Software Defined Storage Pool

- Snapshots: Unlimited, zero-copy snapshots

- Performance: End-to-end user-mode NVMe stack with kernel bypass, zero copy, and zero context switches.

- Replication: High Availability and Replication over RDMA or TCP

- Web Management: Manage the whole storage cluster from one platform

FlexSDS releases high-performance SDS stack optimized for hybrid or all-flash arrays

FlexSDS, a globally recognized name in high-performance software-defined storage, has announced the 2018 release of its software stack for flash arrays.

FlexSDS includes its own core service: a high-performance, lock-less scheduler that, much like an operating system, manages all of the server’s resources, including CPU, memory, storage, PCI devices, and NUMA nodes. The scheduler runs in polling mode and makes full use of the hardware to deliver maximum storage performance.

FlexSDS provides a user-mode (kernel-bypass) NVMe driver that supports directly attached devices, RAID pools, and SDS pools. An SDS pool can host multiple volumes, each exposed as a highly available, snapshot-enabled iSCSI, iSER, or NVMe-oF target.

Key features:

- 100% SDS pool: manages all disks as a single pool, with the ability to create arbitrary, dynamic block volumes with unlimited zero-copy snapshots.

- Polling-mode server pool that listens on multiple NICs and ports.

- Protocol support: iSCSI (TCP), iSER (iSCSI Extensions for RDMA), and NVMe-oF (NVMe over Fabrics).

- High availability and remote mirroring: all interfaces (iSCSI, iSER, and NVMe-oF) support HA.

- Kernel bypass: the I/O path is completely kernel-bypassed with zero data copy (except for legacy disk support).

- Non-SDS pools: directly attached and RAID (0, 1, 5) storage pools.

- Legacy device support: SATA/SAS HDDs and SSDs.

- Data safety: strong consistency; an I/O completes only after its data is safely written to disk.

- Easy management: an easy-to-use, all-in-one, centralized web management platform.

FlexSDS has almost no deployment limitations: it runs on all-flash arrays as well as on traditional SATA/SAS arrays, and even a system with only one or two NVMe devices gets the benefits of kernel-bypass performance.

FlexSDS software-defined storage is now available to end users and OEM partners worldwide.

How to install FlexSDS

Using the command-line iSER demo to test iSER target performance

The FlexSDS package includes a few demo utilities that create a single storage pool to demonstrate storage features and performance. With them, you can preview your favorite features in minutes.

For an iSER target, run the following command to start:

Using the command-line NVMe-oF demo to test offload NVMe-oF target performance

The FlexSDS package includes a few demo utilities that create a single storage pool to demonstrate storage features and performance. With them, you can preview your favorite features in minutes.

For an NVMe-oF target, run the following command to start:

Configure NVMe-oF device with Multipath

Requirements:

Linux kernel 4.8 or newer.

Packages: multipath (device-mapper-multipath) and nvme-cli.

Install them with yum:

```
# yum install device-mapper-multipath nvme-cli
```
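Before configuring multipath, it can help to confirm that the host meets the kernel requirement above and to see whether the kernel's native NVMe multipath is enabled. The script below is a minimal sketch, not part of the FlexSDS package: `kernel_at_least` and `native_multipath_state` are hypothetical helper names, the version comparison assumes GNU `sort -V`, and it reads the `multipath` parameter of the `nvme_core` module from sysfs (present on kernels with native NVMe multipath, 4.15 and later; otherwise it reports `unknown`).

```shell
#!/usr/bin/env bash
# Pre-flight check for an NVMe-oF multipath host (illustrative sketch, not a FlexSDS tool).

# kernel_at_least REQUIRED [CURRENT]
# Succeeds if CURRENT (default: the running kernel) is >= REQUIRED.
# Distribution suffixes such as "-42-generic" are stripped, then GNU `sort -V`
# orders the two version strings; REQUIRED sorting first means CURRENT >= REQUIRED.
kernel_at_least() {
  local required="$1"
  local current="${2:-$(uname -r)}"
  current="${current%%-*}"
  [ "$(printf '%s\n%s\n' "$required" "$current" | sort -V | head -n 1)" = "$required" ]
}

# native_multipath_state
# Prints the nvme_core "multipath" module parameter (Y or N), or "unknown"
# when it is absent (module not loaded, or a kernel without native NVMe multipath).
native_multipath_state() {
  local param=/sys/module/nvme_core/parameters/multipath
  if [ -r "$param" ]; then
    cat "$param"
  else
    echo unknown
  fi
}

if kernel_at_least 4.8; then
  echo "kernel $(uname -r): OK (>= 4.8)"
else
  echo "kernel $(uname -r): older than 4.8, NVMe-oF host support missing"
fi
echo "nvme_core native multipath: $(native_multipath_state)"
```

When native multipath reports `Y`, the kernel itself merges all paths to a subsystem into one `/dev/nvmeXnY` device, and device-mapper-multipath typically does not manage those NVMe namespaces. Which of the two approaches FlexSDS expects is not stated here, so treat the output as information rather than a verdict.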


Using Linux nvme-cli to connect to FlexSDS NVMe-oF targets

Requirements

The Linux NVMe over Fabrics client requires Linux kernel 4.8 or later.

Install the nvme-cli package on the client machine:

```
# yum install nvme-cli
```

or on Debian:

```
# apt-get install nvme-cli
```

Load the kernel module:

```
# modprobe nvme-rdma
```

Discover NVMe-oF subsystems

```
# nvme discover -t rdma -a 192.168.80.101 -s 4420
Discovery Log Number of Records 1, Generation counter 1
=====Discovery Log Entry 0======
trtype:  rdma
adrfam:  ipv4
subtype: nvme subsystem
treq:    not specified
portid:  1
trsvcid: 4420
subnqn:  nqn.2016-12.com.flexsds:all-flash-pool.test_vol0
traddr:  192.168.80.101
rdma_prtype: not specified
rdma_qptype: connected
rdma_cms:    rdma-cm
rdma_pkey: 0x0000
```

Connect to NVMe-oF subsystems

```
# nvme connect -t rdma -n nqn.2016-12.com.flexsds:all-flash-pool.test_vol0 -a 192.168.80.101 -s 4420
```
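When discovery returns several entries (for example one per port or per volume), typing each `nvme connect` line by hand gets tedious. The sketch below is a hypothetical helper, not a feature of nvme-cli or FlexSDS: it reads the multi-line `key: value` text that `nvme discover` prints, keeps only entries whose `subtype` is `nvme subsystem`, and prints one `nvme connect` command per entry built from that entry's `trtype`, `subnqn`, `traddr`, and `trsvcid`. It assumes that plain-text layout; the exact output can differ between nvme-cli versions.

```shell
#!/usr/bin/env bash
# discovery_to_connect: hypothetical helper (not part of nvme-cli or FlexSDS).
# Reads `nvme discover` text output on stdin and prints, without running them,
# one `nvme connect` command per discovery entry whose subtype is "nvme subsystem".
discovery_to_connect() {
  awk '
    # Print the command for the entry collected so far, then reset the fields.
    function emit() {
      if (stype == "nvme subsystem" && nqn != "")
        printf "nvme connect -t %s -n %s -a %s -s %s\n", tr, nqn, addr, svc
      stype = tr = nqn = addr = svc = ""
    }
    # A header line starts a new entry.
    /Discovery Log Entry/ { emit(); next }
    # Split "key: value" on the first colon only (NQNs contain colons).
    {
      key = $0; sub(/:.*/, "", key); gsub(/[ \t]/, "", key)
      val = $0; sub(/^[^:]*:[ \t]*/, "", val); sub(/[ \t]+$/, "", val)
    }
    key == "subtype" { stype = val }
    key == "trtype"  { tr = val }
    key == "subnqn"  { nqn = val }
    key == "traddr"  { addr = val }
    key == "trsvcid" { svc = val }
    END { emit() }
  '
}

# Example: review the generated commands, then run them yourself.
#   nvme discover -t rdma -a 192.168.80.101 -s 4420 | discovery_to_connect
```

Printing instead of executing is deliberate: it leaves the final decision to connect with the operator, and the `-t` value comes from each entry, so the same helper works for RDMA or TCP discovery output.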

List nvme device info:

```
# nvme list
Node             SN                   Model                                    Namespace Usage                      Format           FW Rev
---------------- -------------------- ---------------------------------------- --------- -------------------------- ---------------- --------
/dev/nvme0n1 FlexSDS Controller 1000 GB / 1000 GB 512 B + 0 B A34CCD834CD3544
```

Disconnect NVMe-oF subsystems

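To disconnect from the target, run the nvme disconnect command. The `-d` option takes a controller name rather than a namespace device path; for the `/dev/nvme0n1` namespace above this is typically `nvme0` (`nvme list-subsys` shows the mapping). Alternatively, `-n` disconnects by subsystem NQN.

```
# nvme disconnect -d nvme0
```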
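`nvme disconnect` accepts either a controller name with `-d` or a subsystem NQN with `-n`. With multipath, one subsystem is reached through several controllers (one per path), so it can be handy to look up which controllers belong to a given NQN. A minimal sketch, assuming the standard sysfs layout in which each controller directory under `/sys/class/nvme/` exposes a `subsysnqn` attribute; `ctrl_for_nqn` is a hypothetical helper name, and its optional second argument exists only so the function can be tried against a scratch directory.

```shell
#!/usr/bin/env bash
# ctrl_for_nqn NQN [SYSFS_DIR]
# Hypothetical helper: prints the name of every NVMe controller (e.g. "nvme0")
# whose subsysnqn attribute equals NQN. SYSFS_DIR defaults to /sys/class/nvme;
# overriding it is only useful for trying the function on a scratch directory.
ctrl_for_nqn() {
  local nqn="$1" root="${2:-/sys/class/nvme}" dir
  for dir in "$root"/nvme*; do
    # Skip an unmatched glob and entries without the attribute.
    [ -r "$dir/subsysnqn" ] || continue
    if [ "$(cat "$dir/subsysnqn")" = "$nqn" ]; then
      basename "$dir"
    fi
  done
}

# Example: disconnect every path to the test volume used in this guide.
#   for c in $(ctrl_for_nqn nqn.2016-12.com.flexsds:all-flash-pool.test_vol0); do
#     nvme disconnect -d "$c"
#   done
```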